Improve model card: Add pipeline tag, library name, and detailed inference/training

This PR significantly enhances the model card by adding relevant metadata and comprehensive content from the original GitHub repository.

Key improvements include:

- **Metadata**: Added `pipeline_tag: any-to-any` to correctly categorize the model and improve discoverability at `https://huggingface.co/models?pipeline_tag=any-to-any`. Added `library_name: transformers` based on `config.json` indicating `LlamaForCausalLM` architecture and `transformers_version`, which enables automated usage snippets on the model page.

- **Structure and Links**: Updated the model card title and added prominent badges at the top linking to the paper, project page, and GitHub repository for easy access.

- **Content Completeness**: Integrated the "Open-Source Checklist," "Chat model CLI Inference" instructions, and the detailed "Pretraining and SFT" section from the project's GitHub README, providing a more complete guide for users.

- **Typos**: Corrected the "Lincese" heading to "License".

These updates will improve the model's visibility, usability, and overall presentation on the Hugging Face Hub.

|

@@ -1,11 +1,24 @@

|

|

| 1 |

---

|

| 2 |

-

license: apache-2.0

|

| 3 |

language:

|

| 4 |

- en

|

| 5 |

---

|

| 6 |

-

|

| 7 |

|

| 8 |

## Introduction

|

|

|

|

| 9 |

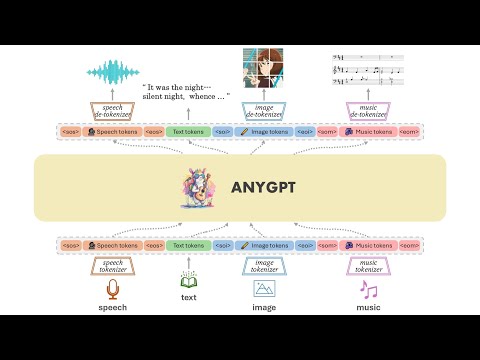

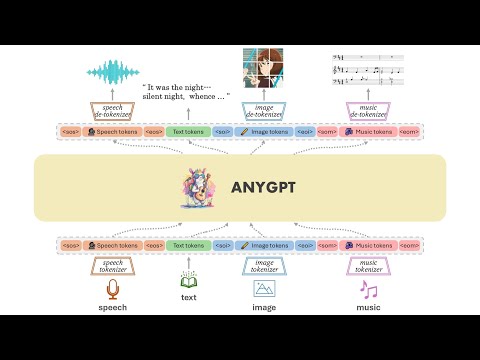

We introduce AnyGPT, an any-to-any multimodal language model that utilizes discrete representations for the unified processing of various modalities, including speech, text, images, and music. The [base model](https://huggingface.co/fnlp/AnyGPT-base) aligns the four modalities, allowing for intermodal conversions between different modalities and text. Furthermore, we constructed the [AnyInstruct](https://huggingface.co/datasets/fnlp/AnyInstruct) dataset based on various generative models, which contains instructions for arbitrary modal interconversion. Trained on this dataset, our [chat model](https://huggingface.co/fnlp/AnyGPT-chat) can engage in free multimodal conversations, where multimodal data can be inserted at will.

|

| 10 |

|

| 11 |

AnyGPT proposes a generative training scheme that converts data from all modalities into a unified discrete representation and trains a Large Language Model (LLM) on it with the Next Token Prediction task. From the perspective of 'compression is intelligence': when the quality of the Tokenizer is high enough and the perplexity (PPL) of the LLM is low enough, it becomes possible to compress the vast amount of multimodal data on the internet into a single model, so that capabilities emerge that are not present in a purely text-based LLM.

|

|

@@ -14,6 +27,12 @@ Demos are shown in [project page](https://junzhan2000.github.io/AnyGPT.github.io

|

|

| 14 |

## Example Demonstrations

|

| 15 |

[](https://www.youtube.com/watch?v=oW3E3pIsaRg)

|

| 16 |

|

| 17 |

|

| 18 |

## Inference

|

| 19 |

|

|

@@ -28,21 +47,22 @@ pip install -r requirements.txt

|

|

| 28 |

```

|

| 29 |

|

| 30 |

### Model Weights

|

|

|

|

| 31 |

* Check the AnyGPT-base weights in [fnlp/AnyGPT-base](https://huggingface.co/fnlp/AnyGPT-base)

|

| 32 |

* Check the AnyGPT-chat weights in [fnlp/AnyGPT-chat](https://huggingface.co/fnlp/AnyGPT-chat)

|

| 33 |

* Check the SpeechTokenizer and Soundstorm weights in [fnlp/AnyGPT-speech-modules](https://huggingface.co/fnlp/AnyGPT-speech-modules)

|

| 34 |

* Check the SEED tokenizer weights in [AILab-CVC/seed-tokenizer-2](https://huggingface.co/AILab-CVC/seed-tokenizer-2)

|

| 35 |

|

| 36 |

-

|

| 37 |

The SpeechTokenizer is used for tokenizing and reconstructing speech, Soundstorm is responsible for completing paralinguistic information, and SEED-tokenizer is used for tokenizing images.

|

| 38 |

|

| 39 |

The weights of unCLIP SD-UNet, which is used to reconstruct images, and of Encodec-32k, which is used to tokenize and reconstruct music, will be downloaded automatically.

|

| 40 |

|

| 41 |

### Base model CLI Inference

|

|

|

|

| 42 |

```bash

|

| 43 |

python anygpt/src/infer/cli_infer_base_model.py \

|

| 44 |

--model-name-or-path "path/to/AnyGPT-7B-base" \

|

| 45 |

-

--image-tokenizer-path

|

| 46 |

--speech-tokenizer-path "path/to/model" \

|

| 47 |

--speech-tokenizer-config "path/to/config" \

|

| 48 |

--soundstorm-path "path/to/model" \

|

|

@@ -50,6 +70,7 @@ python anygpt/src/infer/cli_infer_base_model.py \

|

|

| 50 |

```

|

| 51 |

|

| 52 |

for example

|

|

|

|

| 53 |

```bash

|

| 54 |

python anygpt/src/infer/cli_infer_base_model.py \

|

| 55 |

--model-name-or-path models/anygpt/base \

|

|

@@ -61,6 +82,7 @@ python anygpt/src/infer/cli_infer_base_model.py \

|

|

| 61 |

```

|

| 62 |

|

| 63 |

#### Interaction

|

|

|

|

| 64 |

The Base Model can perform various tasks, including text-to-image, image caption, Automatic Speech Recognition (ASR), Zero-shot Text-to-Speech (TTS), Text-to-Music, and Music Captioning.

|

| 65 |

|

| 66 |

We can perform inference following a specific instruction format.

|

|

@@ -68,71 +90,113 @@ We can perform inference following a specific instruction format.

|

|

| 68 |

* Text-to-Image

|

| 69 |

* ```text|image|{caption}```

|

| 70 |

* example:

|

| 71 |

-

|

| 72 |

* Image Caption

|

| 73 |

* ```image|text|{caption}```

|

| 74 |

* example:

|

| 75 |

-

|

| 76 |

* TTS(random voice)

|

| 77 |

* ```text|speech|{speech content}```

|

| 78 |

* example:

|

| 79 |

-

|

| 80 |

* Zero-shot TTS

|

| 81 |

* ```text|speech|{speech content}|{voice prompt}```

|

| 82 |

* example:

|

| 83 |

-

|

| 84 |

* ASR

|

| 85 |

* ```speech|text|{speech file path}```

|

| 86 |

* example: ```speech|text|AnyGPT/static/infer/speech/voice_prompt2.wav```

|

| 87 |

* Text-to-Music

|

| 88 |

* ```text|music|{caption}```

|

| 89 |

-

* example:

|

| 90 |

-

|

| 91 |

* Music Caption

|

| 92 |

* ```music|text|{music file path}```

|

| 93 |

* example: ```music|text|static/infer/music/features an indie rock sound with distinct element.wav```

|

| 94 |

|

| 95 |

**Notes**

|

| 96 |

|

| 97 |

-

For different tasks, we used different language model decoding strategies. The decoding configuration files for image, speech, and music generation are located in

|

| 98 |

|

| 99 |

Due to limitations in data and training resources, the model's generation may still be unstable. You can generate multiple times or try different decoding strategies.

|

| 100 |

|

| 101 |

-

The speech and music response will be saved to

|

| 102 |

|

| 103 |

-

###

|

| 104 |

-

#### Pretraining

|

| 105 |

|

| 106 |

-

|

| 107 |

-

|

| 108 |

-

|

| 109 |

-

|

| 110 |

-

|

| 111 |

-

|

| 112 |

-

|

| 113 |

-

|

| 114 |

-

|

| 115 |

|

| 116 |

-

|

| 117 |

|

| 118 |

-

|

| 119 |

-

|

| 120 |

-

|

| 121 |

-

|

| 122 |

-

|

| 123 |

|

| 124 |

-

|

| 125 |

|

| 126 |

|

| 127 |

## Acknowledgements

|

| 128 |

- [SpeechGPT](https://github.com/0nutation/SpeechGPT/tree/main/speechgpt), [Vicuna](https://github.com/lm-sys/FastChat): The codebase we built upon.

|

| 129 |

- We thank the great work from [SpeechTokenizer](https://github.com/ZhangXInFD/SpeechTokenizer),[soundstorm-speechtokenizer](https://github.com/ZhangXInFD/soundstorm-speechtokenizer), [SEED-tokenizer](https://github.com/AILab-CVC/SEED),

|

| 130 |

|

| 131 |

-

##

|

| 132 |

`AnyGPT` is released under the original [License](https://ai.meta.com/resources/models-and-libraries/llama-downloads/) of [LLaMA2](https://huggingface.co/meta-llama/Llama-2-13b-chat-hf).

|

| 133 |

|

| 134 |

## Citation

|

| 135 |

If you find AnyGPT and AnyInstruct useful in your research or applications, please kindly cite:

|

|

|

|

| 136 |

```

|

| 137 |

@article{zhan2024anygpt,

|

| 138 |

title={AnyGPT: Unified Multimodal LLM with Discrete Sequence Modeling},

|

|

|

|

| 1 |

---

|

|

|

|

| 2 |

language:

|

| 3 |

- en

|

| 4 |

+

license: apache-2.0

|

| 5 |

+

pipeline_tag: any-to-any

|

| 6 |

+

library_name: transformers

|

| 7 |

---

|

| 8 |

+

|

| 9 |

+

# AnyGPT: Unified Multimodal LLM with Discrete Sequence Modeling

|

| 10 |

+

|

| 11 |

+

[](https://junzhan2000.github.io/AnyGPT.github.io/)

|

| 12 |

+

[](https://arxiv.org/pdf/2402.12226.pdf)

|

| 13 |

+

[](https://github.com/OpenMOSS/AnyGPT)

|

| 14 |

+

[](https://huggingface.co/datasets/fnlp/AnyInstruct)

|

| 15 |

+

|

| 16 |

+

<p align="center">

|

| 17 |

+

<img src="https://github.com/OpenMOSS/AnyGPT/raw/main/static/images/logo.png" width="16%"> <br>

|

| 18 |

+

</p>

|

| 19 |

|

| 20 |

## Introduction

|

| 21 |

+

|

| 22 |

We introduce AnyGPT, an any-to-any multimodal language model that utilizes discrete representations for the unified processing of various modalities, including speech, text, images, and music. The [base model](https://huggingface.co/fnlp/AnyGPT-base) aligns the four modalities, allowing for intermodal conversions between different modalities and text. Furthermore, we constructed the [AnyInstruct](https://huggingface.co/datasets/fnlp/AnyInstruct) dataset based on various generative models, which contains instructions for arbitrary modal interconversion. Trained on this dataset, our [chat model](https://huggingface.co/fnlp/AnyGPT-chat) can engage in free multimodal conversations, where multimodal data can be inserted at will.

|

| 23 |

|

| 24 |

AnyGPT proposes a generative training scheme that converts data from all modalities into a unified discrete representation and trains a Large Language Model (LLM) on it with the Next Token Prediction task. From the perspective of 'compression is intelligence': when the quality of the Tokenizer is high enough and the perplexity (PPL) of the LLM is low enough, it becomes possible to compress the vast amount of multimodal data on the internet into a single model, so that capabilities emerge that are not present in a purely text-based LLM.

|

|

|

|

| 27 |

## Example Demonstrations

|

| 28 |

[](https://www.youtube.com/watch?v=oW3E3pIsaRg)

|

| 29 |

|

| 30 |

+

## Open-Source Checklist

|

| 31 |

+

|

| 32 |

+

- [X] Base Model

|

| 33 |

+

- [X] Chat Model

|

| 34 |

+

- [X] Inference Code

|

| 35 |

+

- [X] Instruction Dataset

|

| 36 |

|

| 37 |

## Inference

|

| 38 |

|

|

|

|

| 47 |

```

|

| 48 |

|

| 49 |

### Model Weights

|

| 50 |

+

|

| 51 |

* Check the AnyGPT-base weights in [fnlp/AnyGPT-base](https://huggingface.co/fnlp/AnyGPT-base)

|

| 52 |

* Check the AnyGPT-chat weights in [fnlp/AnyGPT-chat](https://huggingface.co/fnlp/AnyGPT-chat)

|

| 53 |

* Check the SpeechTokenizer and Soundstorm weights in [fnlp/AnyGPT-speech-modules](https://huggingface.co/fnlp/AnyGPT-speech-modules)

|

| 54 |

* Check the SEED tokenizer weights in [AILab-CVC/seed-tokenizer-2](https://huggingface.co/AILab-CVC/seed-tokenizer-2)

|

| 55 |

|

|

|

|

| 56 |

The SpeechTokenizer is used for tokenizing and reconstructing speech, Soundstorm is responsible for completing paralinguistic information, and SEED-tokenizer is used for tokenizing images.

|

| 57 |

|

| 58 |

The weights of unCLIP SD-UNet, which is used to reconstruct images, and of Encodec-32k, which is used to tokenize and reconstruct music, will be downloaded automatically.

|

| 59 |

|

| 60 |

### Base model CLI Inference

|

| 61 |

+

|

| 62 |

```bash

|

| 63 |

python anygpt/src/infer/cli_infer_base_model.py \

|

| 64 |

--model-name-or-path "path/to/AnyGPT-7B-base" \

|

| 65 |

+

--image-tokenizer-path 'path/to/model' \

|

| 66 |

--speech-tokenizer-path "path/to/model" \

|

| 67 |

--speech-tokenizer-config "path/to/config" \

|

| 68 |

--soundstorm-path "path/to/model" \

|

|

|

|

| 70 |

```

|

| 71 |

|

| 72 |

for example

|

| 73 |

+

|

| 74 |

```bash

|

| 75 |

python anygpt/src/infer/cli_infer_base_model.py \

|

| 76 |

--model-name-or-path models/anygpt/base \

|

|

|

|

| 82 |

```

|

| 83 |

|

| 84 |

#### Interaction

|

| 85 |

+

|

| 86 |

The Base Model can perform various tasks, including text-to-image, image caption, Automatic Speech Recognition (ASR), Zero-shot Text-to-Speech (TTS), Text-to-Music, and Music Captioning.

|

| 87 |

|

| 88 |

We can perform inference following a specific instruction format.

|

|

|

|

| 90 |

* Text-to-Image

|

| 91 |

* ```text|image|{caption}```

|

| 92 |

* example:

|

| 93 |

+

```text|image|A bustling medieval market scene with vendors selling exotic goods under colorful tents```

|

| 94 |

* Image Caption

|

| 95 |

* ```image|text|{caption}```

|

| 96 |

* example:

|

| 97 |

+

```image|text|static/infer/image/cat.jpg```

|

| 98 |

* TTS(random voice)

|

| 99 |

* ```text|speech|{speech content}```

|

| 100 |

* example:

|

| 101 |

+

```text|speech|I could be bounded in a nutshell and count myself a king of infinite space.```

|

| 102 |

* Zero-shot TTS

|

| 103 |

* ```text|speech|{speech content}|{voice prompt}```

|

| 104 |

* example:

|

| 105 |

+

```text|speech|I could be bounded in a nutshell and count myself a king of infinite space.|static/infer/speech/voice_prompt3.wav```

|

| 106 |

* ASR

|

| 107 |

* ```speech|text|{speech file path}```

|

| 108 |

* example: ```speech|text|AnyGPT/static/infer/speech/voice_prompt2.wav```

|

| 109 |

* Text-to-Music

|

| 110 |

* ```text|music|{caption}```

|

| 111 |

+

* example:

|

| 112 |

+

```text|music|features an indie rock sound with distinct elements that evoke a dreamy, soothing atmosphere```

|

| 113 |

* Music Caption

|

| 114 |

* ```music|text|{music file path}```

|

| 115 |

* example: ```music|text|static/infer/music/features an indie rock sound with distinct element.wav```
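
The instruction formats listed above all share one shape: a source modality, a target modality, and a payload, joined by `|`, with an optional voice prompt for zero-shot TTS. The following is a minimal sketch of a helper that assembles such strings before they are typed into the CLI. The function name `build_base_prompt` and its validation rules are assumptions made for illustration; they are not part of the AnyGPT code base.

```python
from typing import Optional

# Modalities that appear in the instruction formats listed above.
MODALITIES = {"text", "image", "speech", "music"}


def build_base_prompt(source: str, target: str, payload: str,
                      voice_prompt: Optional[str] = None) -> str:
    """Join the fields of a base-model instruction with '|' separators.

    Hypothetical helper: it only formats the string and does not call the model.
    """
    if source not in MODALITIES or target not in MODALITIES:
        raise ValueError(f"unknown modality: {source!r} -> {target!r}")
    if "|" in payload:
        raise ValueError("payload must not contain the '|' separator")
    fields = [source, target, payload]
    if voice_prompt is not None:
        # Only the zero-shot TTS format carries a fourth field.
        if (source, target) != ("text", "speech"):
            raise ValueError("voice_prompt only applies to text|speech")
        fields.append(voice_prompt)
    return "|".join(fields)


if __name__ == "__main__":
    print(build_base_prompt("image", "text", "static/infer/image/cat.jpg"))
    print(build_base_prompt(
        "text", "speech",
        "I could be bounded in a nutshell and count myself a king of infinite space.",
        voice_prompt="static/infer/speech/voice_prompt3.wav",
    ))
```

Rejecting a `|` inside the payload is a conservative choice for this sketch, since the format has no documented escaping rule.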

|

| 116 |

|

| 117 |

**Notes**

|

| 118 |

|

| 119 |

+

For different tasks, we used different language model decoding strategies. The decoding configuration files for image, speech, and music generation are located in ``config/image_generate_config.json``, ``config/speech_generate_config.json``, and ``config/music_generate_config.json``, respectively. The decoding configuration files for other modalities to text are in ``config/text_generate_config.json``. You can directly modify or add parameters to change the decoding strategy.
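
Since the decoding strategy is controlled by plain JSON files, one way to experiment is to load a file, override a few entries, and write it back. The sketch below assumes only that the file holds a single JSON object; the helper name `override_decoding_config` and the `temperature` key in the usage note are hypothetical, so check the actual file for the parameter names it uses.

```python
import json
from pathlib import Path


def override_decoding_config(path, **overrides):
    """Load a JSON decoding config, apply overrides, and write it back.

    Hypothetical helper: assumes the file contains one JSON object.
    Returns the updated configuration as a dict.
    """
    config_path = Path(path)
    config = json.loads(config_path.read_text(encoding="utf-8"))
    if not isinstance(config, dict):
        raise ValueError(f"{config_path} does not contain a JSON object")
    config.update(overrides)
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config
```

A call might look like `override_decoding_config("config/speech_generate_config.json", temperature=0.7)`, where `temperature` stands in for whichever parameter the file really defines.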

|

| 120 |

|

| 121 |

Due to limitations in data and training resources, the model's generation may still be unstable. You can generate multiple times or try different decoding strategies.

|

| 122 |

|

| 123 |

+

The speech and music response will be saved to ``.wav`` files, and the image response will be saved to a ``jpg``. The filename will be a concatenation of the prompt and the time. The paths to these files will be indicated in the response.

|

| 124 |

|

| 125 |

+

### Chat model CLI Inference

|

|

|

|

| 126 |

|

| 127 |

+

```bash

|

| 128 |

+

python anygpt/src/infer/cli_infer_chat_model.py \

|

| 129 |

+

--model-name-or-path 'path/to/model' \

|

| 130 |

+

--image-tokenizer-path 'path/to/model' \

|

| 131 |

+

--speech-tokenizer-path 'path/to/model' \

|

| 132 |

+

--speech-tokenizer-config 'path/to/config' \

|

| 133 |

+

--soundstorm-path 'path/to/model' \

|

| 134 |

+

--output-dir "infer_output/chat"

|

| 135 |

+

```

|

| 136 |

|

| 137 |

+

for example

|

| 138 |

|

| 139 |

+

```bash

|

| 140 |

+

python anygpt/src/infer/cli_infer_chat_model.py \

|

| 141 |

+

--model-name-or-path models/anygpt/chat \

|

| 142 |

+

--image-tokenizer-path models/seed-tokenizer-2/seed_quantizer.pt \

|

| 143 |

+

--speech-tokenizer-path models/speechtokenizer/ckpt.dev \

|

| 144 |

+

--speech-tokenizer-config models/speechtokenizer/config.json \

|

| 145 |

+

--soundstorm-path models/soundstorm/speechtokenizer_soundstorm_mls.pt \

|

| 146 |

+

--output-dir "infer_output/chat"

|

| 147 |

+

```

|

| 148 |

+

|

| 149 |

+

Instruct format

|

| 150 |

+

|

| 151 |

+

```bash

|

| 152 |

+

interleaved|{text_instruction}|{modality}|{image_path}|{voice_prompt}|{speech_instruction}|{music_path}

|

| 153 |

+

```

|

| 154 |

+

|

| 155 |

+

Where ``text_instruction`` is the input text command, ``speech_instruction`` is the input voice command; only one needs to be specified.

|

| 156 |

|

| 157 |

+

``image_path`` and ``music_path`` are the paths for the input image and music, respectively. ``voice_prompt`` is the specified tone of the model's response; if not specified, a random tone is used.

|

| 158 |

|

| 159 |

+

``modality`` refers to the type of output modality, which can be chosen as speech, image, or music; otherwise, it is considered as text. This will only affect which decoding configuration file under the config directory is used by the model (this is because the model's training is limited, leading to different decoding strategies for different modalities). It can also decode token by token, modifying the decoding strategy to the corresponding modality when generating the start token of the modality.

|

| 160 |

+

|

| 161 |

+

**example**

|

| 162 |

+

|

| 163 |

+

* interleaved||image|||static/infer/speech/instruction/Can you draw me a picture of a sunny beach.wav

|

| 164 |

+

* interleaved||music|||static/infer/speech/instruction/Give me a similar style of music.wav

|

| 165 |

+

|

| 166 |

+

To clear the conversation history, please input ``|clear``
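
The interleaved format has seven `|`-separated positions, most of which are usually empty, so it is easy to miscount separators by hand. The sketch below builds the string from named arguments. The function name `build_chat_instruction` is an assumption for illustration, and dropping trailing empty fields is a choice made to match the two examples above rather than documented CLI behavior.

```python
def build_chat_instruction(text_instruction="", modality="", image_path="",
                           voice_prompt="", speech_instruction="", music_path=""):
    """Assemble an 'interleaved|...' chat instruction from named fields.

    Hypothetical helper: it only formats the string and does not call the model.
    """
    if not text_instruction and not speech_instruction:
        raise ValueError("specify text_instruction or speech_instruction")
    fields = ["interleaved", text_instruction, modality, image_path,
              voice_prompt, speech_instruction, music_path]
    if any("|" in field for field in fields):
        raise ValueError("fields must not contain the '|' separator")
    # Drop trailing empty fields, as the examples above do (assumption).
    while fields and fields[-1] == "":
        fields.pop()
    return "|".join(fields)


# The command that clears the conversation history.
CLEAR_COMMAND = "|clear"

if __name__ == "__main__":
    print(build_chat_instruction(
        modality="image",
        speech_instruction="static/infer/speech/instruction/"
                           "Can you draw me a picture of a sunny beach.wav",
    ))
```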

|

| 167 |

+

|

| 168 |

+

### Pretraining and SFT

|

| 169 |

+

|

| 170 |

+

Please refer to ``scripts/stage1_pretrain.sh`` and ``scripts/stage2_sft.sh``

|

| 171 |

+

|

| 172 |

+

We provide training data samples for reference: pre-training samples are in [data/pretrain](https://github.com/OpenMOSS/AnyGPT/tree/main/data/pretrain) and instruction samples are in [data/instruction](https://github.com/OpenMOSS/AnyGPT/tree/main/data/instruction).

|

| 173 |

+

For prompts of different tasks, refer to [task_prompts](https://github.com/OpenMOSS/AnyGPT/blob/16210f829d3b1aa25b0057ebbab0a78057fb59b5/anygpt/src/m_utils/prompter.py#L19), such as plain text dialogue, voice command text reply, text command voice reply, and special prompts for various tasks. You need to process multi-modal data into multi-round dialogue format according to the task template in advance.

|

| 174 |

+

As an example from the instruction data, the following voice conversation uses the "Speech-Instruction" and "Speech-Response" entries of task_prompts:

|

| 175 |

+

|

| 176 |

+

```json

|

| 177 |

+

[

|

| 178 |

+

{

|

| 179 |

+

"role": "user",

|

| 180 |

+

"message": "<sosp><🗣️1><🗣️1><🗣️1><eosp> Please acknowledge the user's vocal input, create a textual response"

|

| 181 |

+

},

|

| 182 |

+

{

|

| 183 |

+

"role": "assistant",

|

| 184 |

+

"message": "<-Ins-> hello, how are you

|

| 185 |

+

<-Res-> I am fine, thank you <sosp><🗣️2><🗣️2><🗣️2><eosp>"

|

| 186 |

+

}

|

| 187 |

+

]

|

| 188 |

+

```
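
A sample in this shape can also be assembled programmatically and serialized with `json.dumps`, which escapes the line break between the `<-Ins->` and `<-Res->` parts as `\n`. The sketch below is a minimal illustration under stated assumptions: the helper names are hypothetical, the integer ids stand in for real SpeechTokenizer output, and the single newline between the two parts is inferred from the sample above.

```python
import json

SOSP, EOSP = "<sosp>", "<eosp>"  # speech span delimiters used in the sample above
SPEECH_TOKEN = "<🗣️{}>"          # placeholder token pattern copied from the sample


def speech_span(token_ids):
    """Wrap speech token ids in the start and end markers."""
    return SOSP + "".join(SPEECH_TOKEN.format(i) for i in token_ids) + EOSP


def speech_dialogue_sample(user_tokens, prompt, transcript, reply, reply_tokens):
    """Build a two-turn sample: spoken instruction in, text plus speech reply out."""
    return [
        {"role": "user",
         "message": f"{speech_span(user_tokens)} {prompt}"},
        {"role": "assistant",
         "message": f"<-Ins-> {transcript}\n"
                    f"<-Res-> {reply} {speech_span(reply_tokens)}"},
    ]


if __name__ == "__main__":
    sample = speech_dialogue_sample(
        [1, 1, 1],
        "Please acknowledge the user's vocal input, create a textual response",
        "hello, how are you",
        "I am fine, thank you",
        [2, 2, 2],
    )
    print(json.dumps(sample, ensure_ascii=False, indent=2))
```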

|

| 189 |

|

| 190 |

## Acknowledgements

|

| 191 |

- [SpeechGPT](https://github.com/0nutation/SpeechGPT/tree/main/speechgpt), [Vicuna](https://github.com/lm-sys/FastChat): The codebase we built upon.

|

| 192 |

- We thank the great work from [SpeechTokenizer](https://github.com/ZhangXInFD/SpeechTokenizer),[soundstorm-speechtokenizer](https://github.com/ZhangXInFD/soundstorm-speechtokenizer), [SEED-tokenizer](https://github.com/AILab-CVC/SEED),

|

| 193 |

|

| 194 |

+

## License

|

| 195 |

`AnyGPT` is released under the original [License](https://ai.meta.com/resources/models-and-libraries/llama-downloads/) of [LLaMA2](https://huggingface.co/meta-llama/Llama-2-13b-chat-hf).

|

| 196 |

|

| 197 |

## Citation

|

| 198 |

If you find AnyGPT and AnyInstruct useful in your research or applications, please kindly cite:

|

| 199 |

+

|

| 200 |

```

|

| 201 |

@article{zhan2024anygpt,

|

| 202 |

title={AnyGPT: Unified Multimodal LLM with Discrete Sequence Modeling},

|