FictionBert

This model was finetuned with Unsloth.

based on Alibaba-NLP/gte-modernbert-base

This is a sentence-transformers model finetuned from Alibaba-NLP/gte-modernbert-base. It maps sentences and paragraphs to a 768-dimensional dense vector space and can be used for semantic textual similarity, semantic search, paraphrase mining, text classification, clustering, and more.

This model is finetuned specifically for fiction retrieval. It's been trained on sci-fi, fantasy, mystery, and other fiction genres.

Dataset size: 800k rows, built entirely from manually cleaned data.

On my test-split benchmark (40k examples with hard negatives), this model outperforms the Qwen3 4B embedding model by 0.5%.

Accuracy on the test split increased from 90.8% (base model) to 95.7% after finetuning.
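The card does not say exactly how test-split accuracy is scored. One common definition for data with hard negatives, and roughly what Sentence Transformers' TripletEvaluator reports, is the share of (anchor, positive, hard negative) triplets in which the anchor is more similar to its positive than to its negative. The NumPy sketch below computes that metric; treat it as an assumption about the benchmark rather than the author's script, and note that normalize and triplet_accuracy are hypothetical helper names.

```python
import numpy as np


def normalize(x: np.ndarray) -> np.ndarray:
    """L2-normalize each row so that dot products equal cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def triplet_accuracy(anchors: np.ndarray, positives: np.ndarray, negatives: np.ndarray) -> float:
    """Fraction of (anchor, positive, hard negative) triplets in which the anchor
    is more cosine-similar to its positive than to its hard negative.
    Each argument is an (n, dim) array of embeddings, aligned row by row."""
    a, p, n = normalize(anchors), normalize(positives), normalize(negatives)
    pos_sim = np.sum(a * p, axis=1)  # cosine(anchor_i, positive_i)
    neg_sim = np.sum(a * n, axis=1)  # cosine(anchor_i, negative_i)
    return float(np.mean(pos_sim > neg_sim))


# Toy example: the first triplet is ranked correctly, the second is not.
anchors = np.array([[1.0, 0.0], [0.0, 1.0]])
positives = np.array([[0.9, 0.1], [1.0, 0.0]])
negatives = np.array([[0.0, 1.0], [0.0, 1.0]])
print(triplet_accuracy(anchors, positives, negatives))  # → 0.5
```

With real data, the three arrays would be model.encode(...) outputs for the anchor, positive, and hard-negative columns of the test split.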

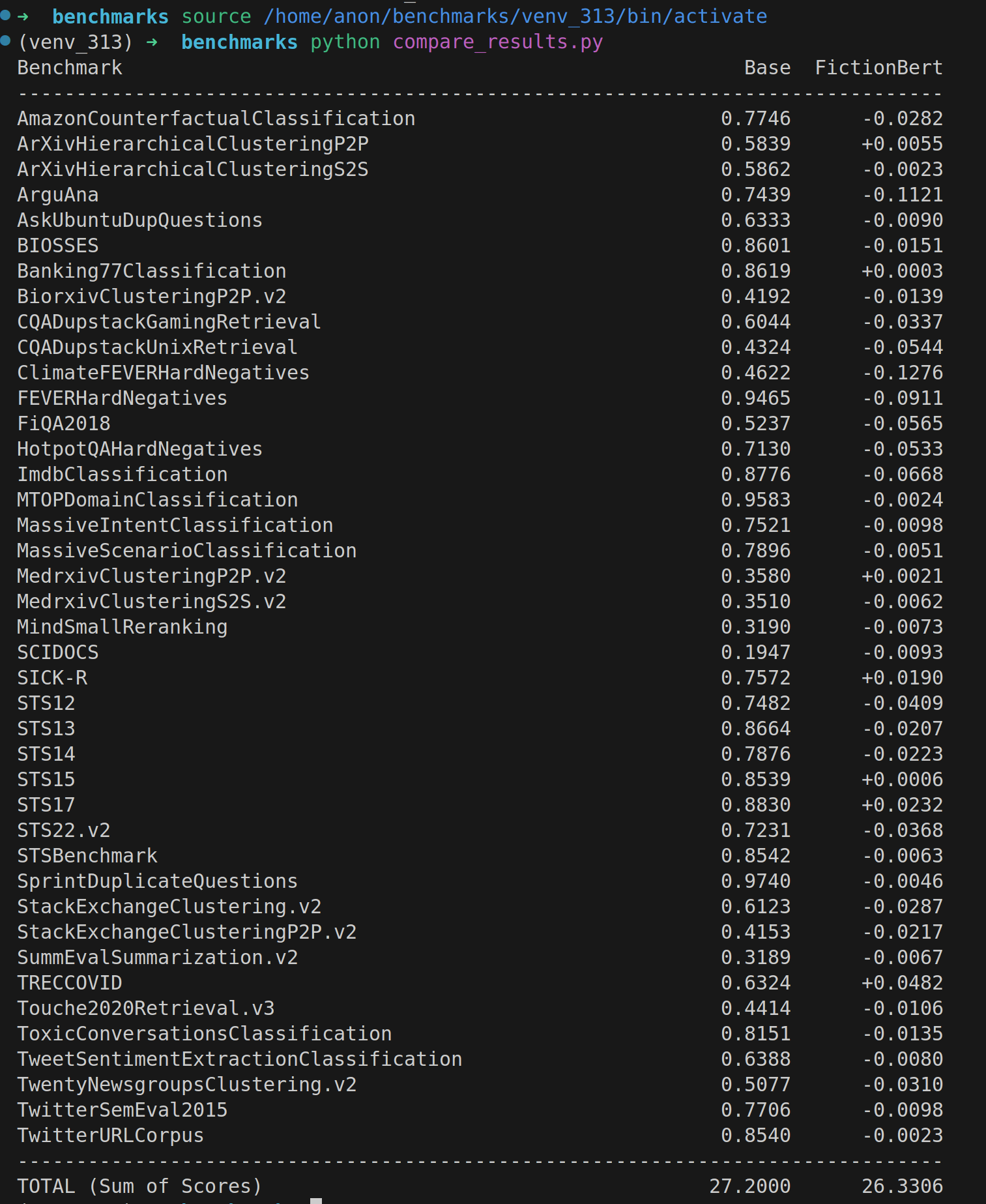

Some MTEB benchmarks saw pretty big losses; they're detailed below.

I did plenty of runs with this model at LoRA ranks from 1 upward, but all of them damaged the other benchmarks to a similar degree.

Benchmark comparison: base model vs. FictionBert

There are plenty of benchmark regressions with this model, but also a couple of gains.

Model Details

Model Description

- Model Type: Sentence Transformer

- Base model: Alibaba-NLP/gte-modernbert-base

- Maximum Sequence Length: 512 tokens

- Output Dimensionality: 768 dimensions

- Similarity Function: Cosine Similarity

- Training Dataset: json
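Sentence Transformers truncates any input longer than the model's max_seq_length, so with the 512-token limit above only the opening of a long chapter would be embedded. One common workaround, sketched below under stated assumptions, is to split long texts into overlapping windows and embed each window separately. The helper name chunk_tokens, the 510-token window (assuming the tokenizer adds two special tokens per input), and the 128-token overlap are illustrative choices, not settings used to train this model.

```python
def chunk_tokens(tokens: list[str], max_len: int = 510, stride: int = 128) -> list[list[str]]:
    """Split a token sequence into windows of at most max_len tokens, where each
    window shares its first `stride` tokens with the end of the previous one.
    max_len=510 assumes two special tokens are added per input (an assumption)."""
    if max_len <= stride:
        raise ValueError("max_len must be larger than stride")
    step = max_len - stride  # how far each new window starts after the previous one
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_len])
        if start + max_len >= len(tokens):  # this window already reaches the end
            break
    return chunks


# Stand-in tokens; real code should count tokens with the model's own tokenizer.
tokens = [str(i) for i in range(1000)]
windows = chunk_tokens(tokens)
print(len(windows), [len(w) for w in windows])  # → 3 [510, 510, 236]
```

Whitespace-split words usually understate the subword token count, so for real limits count tokens with the model's tokenizer (model.tokenizer on a loaded SentenceTransformer) before decoding each window back to text.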

Model Sources

- Documentation: Sentence Transformers Documentation

- Repository: Sentence Transformers on GitHub

- Hugging Face: Sentence Transformers on Hugging Face

Full Model Architecture

```
SentenceTransformer(
  (0): Transformer({'max_seq_length': 512, 'do_lower_case': False, 'architecture': 'PeftModelForFeatureExtraction'})
  (1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': True, 'pooling_mode_mean_tokens': False, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})
)
```
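In the architecture above, the Pooling module has pooling_mode_cls_token set to True and there is no Normalize module, so each sentence embedding is the raw 768-dimensional hidden state of the first token, and the cosine similarity function handles length normalization at comparison time. A NumPy sketch of those two steps, with random arrays standing in for real Transformer outputs (cls_pool and cosine_matrix are hypothetical names):

```python
import numpy as np


def cls_pool(token_embeddings: np.ndarray) -> np.ndarray:
    """CLS pooling: keep the hidden state of the first token of every sequence.
    token_embeddings has shape (batch, seq_len, hidden); the result is (batch, hidden)."""
    return token_embeddings[:, 0, :]


def cosine_matrix(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of a and the rows of b."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T


# Random stand-in for Transformer outputs: 3 sequences, 7 tokens, 768 dims.
rng = np.random.default_rng(0)
hidden_states = rng.normal(size=(3, 7, 768))
embeddings = cls_pool(hidden_states)
print(embeddings.shape)  # → (3, 768)
print(np.round(cosine_matrix(embeddings, embeddings), 4))
```

Assuming the tokenizer pads on the right, position 0 always holds the real first token, which is why CLS pooling needs no attention mask, unlike mean pooling.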

Usage

Direct Usage (Sentence Transformers)

First install the Sentence Transformers library:

```bash
pip install -U sentence-transformers
```

Then you can load this model and run inference.

```python
from sentence_transformers import SentenceTransformer

# Download from the 🤗 Hub
model = SentenceTransformer("electroglyph/FictionBert")

# Run inference
sentences = [
    'A being associated with malevolent influence must exhibit tangible signs of corruption, while a person disregarded by others despite a legitimate claim to priority quietly calculates a missed academic obligation, realizing that certain values surpass academic perfection.',
    "Surely a demon-caller must show some outward manifestation of that kind of evil. Norman pushed into the first available seat. His injured hand should've entitled him to one the moment he got on the bus but not one of his selfish, self-centered fellow students would get up although he'd glared at all and sundry. Still sulking, he fished his calculator out of his shirt pocket, and began to work out the time he'd need to spend downtown. He was, at that very moment, missing an analytical geometry class. It was the first class he'd ever skipped. His parents would have fits. He didn't care. As much as he'd hoarded every A and A plus---he had a complete record of every mark he'd ever received---he'd realized in the last couple of days that some things were more important.",
    'It was one of the things he liked best about this part of the city, the fact that it never really slept, and it was why he had his home as close to it as he could get. Two blocks past Yonge, he turned into a circular drive and followed the curve around to the door of his building. In his time, he had lived in castles of every description, a fair number of very private country estates, and even a crypt or two when times were bad, but it had been centuries since he\'d had a home that suited him as well as the condominium he\'d bought in the heart of Toronto. "Good evening, Mr. Fitzroy." "Evening, Greg. Anything happening?" The security guard smiled and reached for the door release.',
]
embeddings = model.encode(sentences)
print(embeddings.shape)
# (3, 768)

# Get the similarity scores for the embeddings
similarities = model.similarity(embeddings, embeddings)
print(similarities)
# tensor([[ 1.0000,  0.4737, -0.0624],
#         [ 0.4737,  1.0000,  0.0190],
#         [-0.0624,  0.0190,  1.0000]])
```
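For fiction retrieval, the same embeddings can rank a corpus of passages against a query. The NumPy sketch below scores every corpus row by cosine similarity and returns the top k; top_k_search is a hypothetical name, and Sentence Transformers' own sentence_transformers.util.semantic_search is a batched alternative. Toy vectors stand in for model.encode output so the sketch runs without downloading the model.

```python
import numpy as np


def top_k_search(query_emb: np.ndarray, corpus_emb: np.ndarray, k: int = 5):
    """Return (indices, scores) of the k corpus rows most cosine-similar to a
    single query vector, highest score first."""
    q = query_emb / np.linalg.norm(query_emb)
    c = corpus_emb / np.linalg.norm(corpus_emb, axis=1, keepdims=True)
    scores = c @ q                 # cosine similarity of each corpus row to the query
    k = min(k, len(scores))        # never ask for more results than there are rows
    top = np.argsort(-scores)[:k]  # indices sorted by descending score
    return top, scores[top]


# Toy 2-D vectors standing in for model.encode(...) output.
corpus = np.array([[1.0, 0.0], [0.0, 1.0], [0.7, 0.7]])
indices, scores = top_k_search(np.array([1.0, 0.1]), corpus, k=2)
print(indices.tolist())  # → [0, 2]

# With the model loaded as in the usage example (passages is your own list of strings):
# indices, scores = top_k_search(model.encode("a vampire who lives in a Toronto condo"),
#                                model.encode(passages), k=3)
```

For a large corpus, encode the passages once and reuse the matrix; only the query needs encoding per search.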

Training Details

- Loss: MultipleNegativesRankingLoss with these parameters:

```json
{
    "scale": 20.0,
    "similarity_fct": "cos_sim",
    "gather_across_devices": false
}
```
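MultipleNegativesRankingLoss treats each (anchor, positive) pair in a batch as a classification problem: the anchor must pick its own positive out of every candidate in the batch, with the other positives (plus any hard-negative column, if one is supplied) acting as negatives. With similarity_fct cos_sim and scale 20.0, the logits are 20 × cosine similarity and the loss is cross-entropy against the matching column; at a batch size of 500, each anchor faces 499 in-batch negatives. The NumPy sketch below is a simplified single-device re-implementation (matching gather_across_devices: false) for illustration, not the library's code, and the card does not say whether hard negatives were used in training.

```python
import numpy as np


def normalize(x: np.ndarray) -> np.ndarray:
    """L2-normalize rows so that a @ b.T gives cosine similarities."""
    return x / np.linalg.norm(x, axis=1, keepdims=True)


def mnrl_loss(anchors, positives, negatives=None, scale: float = 20.0) -> float:
    """Simplified MultipleNegativesRankingLoss on one device.

    Row i of `anchors` should match row i of `positives`; every other candidate
    (the other positives plus any optional hard negatives) is treated as a negative.
    """
    candidates = positives if negatives is None else np.concatenate([positives, negatives], axis=0)
    logits = scale * (normalize(anchors) @ normalize(candidates).T)  # (batch, n_candidates)
    # Numerically stable log-softmax over each anchor's row of candidates.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Cross-entropy where the correct class for anchor i is candidate column i.
    return float(-np.mean(np.diag(log_probs)))


# Perfectly separated pairs give a loss near 0; indistinguishable pairs give log(batch_size).
print(mnrl_loss(np.eye(4), np.eye(4)))
print(mnrl_loss(np.ones((4, 3)), np.ones((4, 3))))  # ≈ log(4)
```

The scale acts as an inverse temperature: multiplying cosine similarities (which lie in [-1, 1]) by 20 sharpens the softmax enough for the correct pair to dominate.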

Training Hyperparameters

Non-Default Hyperparameters

- per_device_train_batch_size: 500
- learning_rate: 6e-06
- weight_decay: 0.01
- lr_scheduler_type: cosine_with_restarts
- warmup_steps: 100
- bf16: True
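As we read the Transformers scheduler, lr_scheduler_type cosine_with_restarts with an empty lr_scheduler_kwargs (see the full list below) falls back to a single cosine cycle (num_cycles defaults to 1), so the learning rate warms up linearly to 6e-06 over the first 100 steps and then decays along one cosine curve to zero. The sketch below reproduces that shape; it is not code from this training run, and the total of 4572 steps is an assumption inferred from the training log (about 1524 steps per epoch over num_train_epochs: 3).

```python
import math


def lr_at_step(step: int, base_lr: float = 6e-06, warmup_steps: int = 100,
               total_steps: int = 4572, num_cycles: int = 1) -> float:
    """Learning rate after `step` optimizer steps: linear warmup, then cosine
    decay with hard restarts. With num_cycles=1 the curve decays once, no restart.
    total_steps=4572 is inferred from the training log, not read from a config."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    if progress >= 1.0:
        return 0.0
    # Each cycle restarts the cosine at its peak; (num_cycles * progress) % 1 is the
    # position within the current cycle.
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * ((num_cycles * progress) % 1.0))))


for s in (0, 50, 100, 2336, 4000, 4572):
    print(s, lr_at_step(s))
```

Step 2336 is the midpoint of the decay phase, where the rate is half the peak (3e-06).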

All Hyperparameters


- overwrite_output_dir: False
- do_predict: False
- eval_strategy: no
- prediction_loss_only: True
- per_device_train_batch_size: 500
- per_device_eval_batch_size: 8
- per_gpu_train_batch_size: None
- per_gpu_eval_batch_size: None
- gradient_accumulation_steps: 1
- eval_accumulation_steps: None
- torch_empty_cache_steps: None
- learning_rate: 6e-06
- weight_decay: 0.01
- adam_beta1: 0.9
- adam_beta2: 0.999
- adam_epsilon: 1e-08
- max_grad_norm: 1.0
- num_train_epochs: 3
- max_steps: -1
- lr_scheduler_type: cosine_with_restarts
- lr_scheduler_kwargs: {}
- warmup_ratio: 0.0
- warmup_steps: 100
- log_level: passive
- log_level_replica: warning
- log_on_each_node: True
- logging_nan_inf_filter: True
- save_safetensors: True
- save_on_each_node: False
- save_only_model: False
- restore_callback_states_from_checkpoint: False
- no_cuda: False
- use_cpu: False
- use_mps_device: False
- seed: 42
- data_seed: None
- jit_mode_eval: False
- bf16: True
- fp16: False
- fp16_opt_level: O1
- half_precision_backend: auto
- bf16_full_eval: False
- fp16_full_eval: False
- tf32: None
- local_rank: 0
- ddp_backend: None
- tpu_num_cores: None
- tpu_metrics_debug: False
- debug: []
- dataloader_drop_last: False
- dataloader_num_workers: 0
- dataloader_prefetch_factor: None
- past_index: -1
- disable_tqdm: False
- remove_unused_columns: True
- label_names: None
- load_best_model_at_end: False
- ignore_data_skip: False
- fsdp: []
- fsdp_min_num_params: 0
- fsdp_config: {'min_num_params': 0, 'xla': False, 'xla_fsdp_v2': False, 'xla_fsdp_grad_ckpt': False}
- fsdp_transformer_layer_cls_to_wrap: None
- accelerator_config: {'split_batches': False, 'dispatch_batches': None, 'even_batches': True, 'use_seedable_sampler': True, 'non_blocking': False, 'gradient_accumulation_kwargs': None}
- parallelism_config: None
- deepspeed: None
- label_smoothing_factor: 0.0
- optim: adamw_torch_fused
- optim_args: None
- adafactor: False
- group_by_length: False
- length_column_name: length
- project: huggingface
- trackio_space_id: trackio
- ddp_find_unused_parameters: None
- ddp_bucket_cap_mb: None
- ddp_broadcast_buffers: False
- dataloader_pin_memory: True
- dataloader_persistent_workers: False
- skip_memory_metrics: True
- use_legacy_prediction_loop: False
- push_to_hub: False
- resume_from_checkpoint: None
- hub_model_id: None
- hub_strategy: every_save
- hub_private_repo: None
- hub_always_push: False
- hub_revision: None
- gradient_checkpointing: False
- gradient_checkpointing_kwargs: None
- include_inputs_for_metrics: False
- include_for_metrics: []
- eval_do_concat_batches: True
- fp16_backend: auto
- push_to_hub_model_id: None
- push_to_hub_organization: None
- mp_parameters:
- auto_find_batch_size: False
- full_determinism: False
- torchdynamo: None
- ray_scope: last
- ddp_timeout: 1800
- torch_compile: False
- torch_compile_backend: None
- torch_compile_mode: None
- include_tokens_per_second: False
- include_num_input_tokens_seen: no
- neftune_noise_alpha: None
- optim_target_modules: None
- batch_eval_metrics: False
- eval_on_start: False
- use_liger_kernel: False
- liger_kernel_config: None
- eval_use_gather_object: False
- average_tokens_across_devices: True
- prompts: None
- batch_sampler: batch_sampler
- multi_dataset_batch_sampler: proportional
- router_mapping: {}
- learning_rate_mapping: {}

Training Logs


| Epoch | Step | Training Loss |
|---|---|---|
| 0.0066 | 10 | 5.0675 |
| 0.0131 | 20 | 5.0698 |
| 0.0197 | 30 | 5.0747 |
| 0.0262 | 40 | 5.1686 |
| 0.0328 | 50 | 5.0452 |
| 0.0394 | 60 | 5.0103 |
| 0.0459 | 70 | 5.0011 |
| 0.0525 | 80 | 5.0711 |
| 0.0591 | 90 | 5.0241 |
| 0.0656 | 100 | 4.8915 |
| 0.0722 | 110 | 5.031 |
| 0.0787 | 120 | 4.9899 |
| 0.0853 | 130 | 4.835 |
| 0.0919 | 140 | 4.9621 |
| 0.0984 | 150 | 4.8343 |
| 0.1050 | 160 | 4.9046 |
| 0.1115 | 170 | 4.7145 |
| 0.1181 | 180 | 4.799 |
| 0.1247 | 190 | 4.6537 |
| 0.1312 | 200 | 4.619 |
| 0.1378 | 210 | 4.5875 |
| 0.1444 | 220 | 4.5914 |
| 0.1509 | 230 | 4.5139 |
| 0.1575 | 240 | 4.4542 |
| 0.1640 | 250 | 4.3606 |
| 0.1706 | 260 | 4.3778 |
| 0.1772 | 270 | 4.3027 |
| 0.1837 | 280 | 4.2375 |
| 0.1903 | 290 | 4.1997 |
| 0.1969 | 300 | 4.1519 |
| 0.2034 | 310 | 4.0318 |
| 0.2100 | 320 | 3.9845 |
| 0.2165 | 330 | 3.9522 |
| 0.2231 | 340 | 3.8775 |
| 0.2297 | 350 | 3.852 |
| 0.2362 | 360 | 3.7913 |
| 0.2428 | 370 | 3.718 |
| 0.2493 | 380 | 3.6734 |
| 0.2559 | 390 | 3.5953 |
| 0.2625 | 400 | 3.5469 |
| 0.2690 | 410 | 3.5365 |
| 0.2756 | 420 | 3.3974 |
| 0.2822 | 430 | 3.3869 |
| 0.2887 | 440 | 3.3836 |
| 0.2953 | 450 | 3.3066 |
| 0.3018 | 460 | 3.2457 |
| 0.3084 | 470 | 3.1955 |
| 0.3150 | 480 | 3.1353 |
| 0.3215 | 490 | 3.0846 |
| 0.3281 | 500 | 3.0547 |
| 0.3346 | 510 | 2.925 |
| 0.3412 | 520 | 2.894 |
| 0.3478 | 530 | 2.827 |
| 0.3543 | 540 | 2.7675 |
| 0.3609 | 550 | 2.7378 |
| 0.3675 | 560 | 2.7029 |
| 0.3740 | 570 | 2.6941 |
| 0.3806 | 580 | 2.6109 |
| 0.3871 | 590 | 2.559 |
| 0.3937 | 600 | 2.5034 |
| 0.4003 | 610 | 2.468 |
| 0.4068 | 620 | 2.3859 |
| 0.4134 | 630 | 2.3422 |
| 0.4199 | 640 | 2.3226 |
| 0.4265 | 650 | 2.2064 |
| 0.4331 | 660 | 2.1447 |
| 0.4396 | 670 | 2.1366 |
| 0.4462 | 680 | 2.0387 |
| 0.4528 | 690 | 2.0422 |
| 0.4593 | 700 | 2.0372 |
| 0.4659 | 710 | 1.943 |
| 0.4724 | 720 | 1.9419 |
| 0.4790 | 730 | 1.8448 |
| 0.4856 | 740 | 1.8012 |
| 0.4921 | 750 | 1.7876 |
| 0.4987 | 760 | 1.7801 |
| 0.5052 | 770 | 1.7242 |
| 0.5118 | 780 | 1.675 |
| 0.5184 | 790 | 1.6643 |
| 0.5249 | 800 | 1.5671 |
| 0.5315 | 810 | 1.5788 |
| 0.5381 | 820 | 1.5244 |
| 0.5446 | 830 | 1.4709 |
| 0.5512 | 840 | 1.4927 |
| 0.5577 | 850 | 1.4416 |
| 0.5643 | 860 | 1.3888 |
| 0.5709 | 870 | 1.3499 |
| 0.5774 | 880 | 1.3941 |
| 0.5840 | 890 | 1.3458 |
| 0.5906 | 900 | 1.272 |
| 0.5971 | 910 | 1.3102 |
| 0.6037 | 920 | 1.2406 |
| 0.6102 | 930 | 1.2744 |
| 0.6168 | 940 | 1.193 |
| 0.6234 | 950 | 1.1719 |
| 0.6299 | 960 | 1.1651 |
| 0.6365 | 970 | 1.1368 |
| 0.6430 | 980 | 1.1108 |
| 0.6496 | 990 | 1.078 |
| 0.6562 | 1000 | 1.0485 |
| 0.6627 | 1010 | 1.077 |
| 0.6693 | 1020 | 1.0684 |
| 0.6759 | 1030 | 1.0077 |
| 0.6824 | 1040 | 1.0239 |
| 0.6890 | 1050 | 1.0173 |
| 0.6955 | 1060 | 0.9564 |
| 0.7021 | 1070 | 0.9656 |
| 0.7087 | 1080 | 0.931 |
| 0.7152 | 1090 | 0.9259 |
| 0.7218 | 1100 | 0.9225 |
| 0.7283 | 1110 | 0.9269 |
| 0.7349 | 1120 | 0.9215 |
| 0.7415 | 1130 | 0.9313 |
| 0.7480 | 1140 | 0.8937 |
| 0.7546 | 1150 | 0.8362 |
| 0.7612 | 1160 | 0.8669 |
| 0.7677 | 1170 | 0.8452 |
| 0.7743 | 1180 | 0.8577 |
| 0.7808 | 1190 | 0.8658 |
| 0.7874 | 1200 | 0.8164 |
| 0.7940 | 1210 | 0.7835 |
| 0.8005 | 1220 | 0.8487 |
| 0.8071 | 1230 | 0.8133 |
| 0.8136 | 1240 | 0.7967 |
| 0.8202 | 1250 | 0.7979 |
| 0.8268 | 1260 | 0.7847 |
| 0.8333 | 1270 | 0.7839 |
| 0.8399 | 1280 | 0.7853 |
| 0.8465 | 1290 | 0.8109 |
| 0.8530 | 1300 | 0.795 |
| 0.8596 | 1310 | 0.7547 |
| 0.8661 | 1320 | 0.7476 |
| 0.8727 | 1330 | 0.7205 |
| 0.8793 | 1340 | 0.7456 |
| 0.8858 | 1350 | 0.7237 |
| 0.8924 | 1360 | 0.7035 |
| 0.8990 | 1370 | 0.7214 |
| 0.9055 | 1380 | 0.7065 |
| 0.9121 | 1390 | 0.6964 |
| 0.9186 | 1400 | 0.7121 |
| 0.9252 | 1410 | 0.6969 |
| 0.9318 | 1420 | 0.6862 |
| 0.9383 | 1430 | 0.685 |
| 0.9449 | 1440 | 0.7056 |
| 0.9514 | 1450 | 0.6949 |
| 0.9580 | 1460 | 0.6807 |
| 0.9646 | 1470 | 0.6497 |
| 0.9711 | 1480 | 0.666 |
| 0.9777 | 1490 | 0.6498 |
| 0.9843 | 1500 | 0.6875 |
| 0.9908 | 1510 | 0.656 |
| 0.9974 | 1520 | 0.6613 |
| 1.0039 | 1530 | 0.6246 |
| 1.0105 | 1540 | 0.655 |
| 1.0171 | 1550 | 0.657 |
| 1.0236 | 1560 | 0.6602 |
| 1.0302 | 1570 | 0.6445 |
| 1.0367 | 1580 | 0.6322 |
| 1.0433 | 1590 | 0.6166 |
| 1.0499 | 1600 | 0.6297 |
| 1.0564 | 1610 | 0.6116 |
| 1.0630 | 1620 | 0.6136 |
| 1.0696 | 1630 | 0.5916 |
| 1.0761 | 1640 | 0.5943 |
| 1.0827 | 1650 | 0.5993 |
| 1.0892 | 1660 | 0.6135 |
| 1.0958 | 1670 | 0.6004 |
| 1.1024 | 1680 | 0.6194 |
| 1.1089 | 1690 | 0.6129 |
| 1.1155 | 1700 | 0.6048 |
| 1.1220 | 1710 | 0.5834 |
| 1.1286 | 1720 | 0.5922 |
| 1.1352 | 1730 | 0.5785 |
| 1.1417 | 1740 | 0.5823 |
| 1.1483 | 1750 | 0.5734 |
| 1.1549 | 1760 | 0.5811 |
| 1.1614 | 1770 | 0.5692 |
| 1.1680 | 1780 | 0.5707 |
| 1.1745 | 1790 | 0.5548 |
| 1.1811 | 1800 | 0.5642 |
| 1.1877 | 1810 | 0.5833 |
| 1.1942 | 1820 | 0.5718 |
| 1.2008 | 1830 | 0.566 |
| 1.2073 | 1840 | 0.5551 |
| 1.2139 | 1850 | 0.5514 |
| 1.2205 | 1860 | 0.5401 |
| 1.2270 | 1870 | 0.5588 |
| 1.2336 | 1880 | 0.5573 |
| 1.2402 | 1890 | 0.5503 |
| 1.2467 | 1900 | 0.5557 |
| 1.2533 | 1910 | 0.5424 |
| 1.2598 | 1920 | 0.5466 |
| 1.2664 | 1930 | 0.5508 |
| 1.2730 | 1940 | 0.5467 |
| 1.2795 | 1950 | 0.5132 |
| 1.2861 | 1960 | 0.5291 |
| 1.2927 | 1970 | 0.5243 |
| 1.2992 | 1980 | 0.5275 |
| 1.3058 | 1990 | 0.5483 |
| 1.3123 | 2000 | 0.522 |
| 1.3189 | 2010 | 0.5147 |
| 1.3255 | 2020 | 0.5062 |
| 1.3320 | 2030 | 0.5119 |
| 1.3386 | 2040 | 0.5184 |
| 1.3451 | 2050 | 0.5039 |
| 1.3517 | 2060 | 0.5283 |
| 1.3583 | 2070 | 0.5046 |
| 1.3648 | 2080 | 0.5343 |
| 1.3714 | 2090 | 0.4888 |
| 1.3780 | 2100 | 0.5241 |
| 1.3845 | 2110 | 0.5108 |
| 1.3911 | 2120 | 0.516 |
| 1.3976 | 2130 | 0.5101 |
| 1.4042 | 2140 | 0.5246 |
| 1.4108 | 2150 | 0.5045 |
| 1.4173 | 2160 | 0.5161 |
| 1.4239 | 2170 | 0.4788 |
| 1.4304 | 2180 | 0.5106 |
| 1.4370 | 2190 | 0.476 |
| 1.4436 | 2200 | 0.4804 |
| 1.4501 | 2210 | 0.4874 |
| 1.4567 | 2220 | 0.4802 |
| 1.4633 | 2230 | 0.5203 |
| 1.4698 | 2240 | 0.4944 |
| 1.4764 | 2250 | 0.4876 |
| 1.4829 | 2260 | 0.4912 |
| 1.4895 | 2270 | 0.476 |
| 1.4961 | 2280 | 0.4859 |
| 1.5026 | 2290 | 0.4505 |
| 1.5092 | 2300 | 0.4949 |
| 1.5157 | 2310 | 0.4947 |
| 1.5223 | 2320 | 0.4726 |
| 1.5289 | 2330 | 0.4549 |
| 1.5354 | 2340 | 0.4434 |
| 1.5420 | 2350 | 0.4546 |
| 1.5486 | 2360 | 0.4513 |
| 1.5551 | 2370 | 0.4672 |
| 1.5617 | 2380 | 0.4639 |
| 1.5682 | 2390 | 0.4575 |
| 1.5748 | 2400 | 0.4719 |
| 1.5814 | 2410 | 0.469 |
| 1.5879 | 2420 | 0.4521 |
| 1.5945 | 2430 | 0.4529 |
| 1.6010 | 2440 | 0.4834 |
| 1.6076 | 2450 | 0.4621 |
| 1.6142 | 2460 | 0.463 |
| 1.6207 | 2470 | 0.4837 |
| 1.6273 | 2480 | 0.4687 |
| 1.6339 | 2490 | 0.463 |
| 1.6404 | 2500 | 0.4518 |
| 1.6470 | 2510 | 0.4356 |
| 1.6535 | 2520 | 0.4459 |
| 1.6601 | 2530 | 0.4746 |
| 1.6667 | 2540 | 0.4518 |
| 1.6732 | 2550 | 0.4363 |
| 1.6798 | 2560 | 0.4604 |
| 1.6864 | 2570 | 0.4481 |
| 1.6929 | 2580 | 0.4525 |
| 1.6995 | 2590 | 0.4421 |
| 1.7060 | 2600 | 0.4265 |
| 1.7126 | 2610 | 0.4366 |
| 1.7192 | 2620 | 0.4444 |
| 1.7257 | 2630 | 0.4632 |
| 1.7323 | 2640 | 0.4587 |
| 1.7388 | 2650 | 0.4421 |
| 1.7454 | 2660 | 0.444 |
| 1.7520 | 2670 | 0.432 |
| 1.7585 | 2680 | 0.4491 |
| 1.7651 | 2690 | 0.4375 |
| 1.7717 | 2700 | 0.4425 |
| 1.7782 | 2710 | 0.4448 |
| 1.7848 | 2720 | 0.4526 |
| 1.7913 | 2730 | 0.4277 |
| 1.7979 | 2740 | 0.4399 |
| 1.8045 | 2750 | 0.4287 |
| 1.8110 | 2760 | 0.4185 |
| 1.8176 | 2770 | 0.4332 |
| 1.8241 | 2780 | 0.4272 |
| 1.8307 | 2790 | 0.4358 |
| 1.8373 | 2800 | 0.4337 |
| 1.8438 | 2810 | 0.4259 |
| 1.8504 | 2820 | 0.4497 |
| 1.8570 | 2830 | 0.4191 |
| 1.8635 | 2840 | 0.4286 |
| 1.8701 | 2850 | 0.4492 |
| 1.8766 | 2860 | 0.4306 |
| 1.8832 | 2870 | 0.4337 |
| 1.8898 | 2880 | 0.4314 |
| 1.8963 | 2890 | 0.448 |
| 1.9029 | 2900 | 0.4277 |
| 1.9094 | 2910 | 0.424 |
| 1.9160 | 2920 | 0.428 |
| 1.9226 | 2930 | 0.4297 |
| 1.9291 | 2940 | 0.4316 |
| 1.9357 | 2950 | 0.4436 |
| 1.9423 | 2960 | 0.4071 |
| 1.9488 | 2970 | 0.4108 |
| 1.9554 | 2980 | 0.4073 |
| 1.9619 | 2990 | 0.4289 |
| 1.9685 | 3000 | 0.4496 |
| 1.9751 | 3010 | 0.4236 |
| 1.9816 | 3020 | 0.4381 |
| 1.9882 | 3030 | 0.4109 |
| 1.9948 | 3040 | 0.4152 |
| 2.0013 | 3050 | 0.4137 |
| 2.0079 | 3060 | 0.3986 |
| 2.0144 | 3070 | 0.3965 |
| 2.0210 | 3080 | 0.4313 |
| 2.0276 | 3090 | 0.4331 |
| 2.0341 | 3100 | 0.4285 |
| 2.0407 | 3110 | 0.4441 |
| 2.0472 | 3120 | 0.4243 |
| 2.0538 | 3130 | 0.4157 |
| 2.0604 | 3140 | 0.4086 |
| 2.0669 | 3150 | 0.4282 |
| 2.0735 | 3160 | 0.4152 |
| 2.0801 | 3170 | 0.4172 |
| 2.0866 | 3180 | 0.4288 |
| 2.0932 | 3190 | 0.4062 |
| 2.0997 | 3200 | 0.4116 |
| 2.1063 | 3210 | 0.4197 |
| 2.1129 | 3220 | 0.4071 |
| 2.1194 | 3230 | 0.4073 |
| 2.1260 | 3240 | 0.4109 |
| 2.1325 | 3250 | 0.4383 |
| 2.1391 | 3260 | 0.4108 |
| 2.1457 | 3270 | 0.4011 |
| 2.1522 | 3280 | 0.4035 |
| 2.1588 | 3290 | 0.4307 |
| 2.1654 | 3300 | 0.4208 |
| 2.1719 | 3310 | 0.4041 |
| 2.1785 | 3320 | 0.3979 |
| 2.1850 | 3330 | 0.4002 |
| 2.1916 | 3340 | 0.4056 |
| 2.1982 | 3350 | 0.4198 |
| 2.2047 | 3360 | 0.4036 |
| 2.2113 | 3370 | 0.4353 |
| 2.2178 | 3380 | 0.4239 |
| 2.2244 | 3390 | 0.4004 |
| 2.2310 | 3400 | 0.4006 |
| 2.2375 | 3410 | 0.4101 |
| 2.2441 | 3420 | 0.4269 |
| 2.2507 | 3430 | 0.4261 |
| 2.2572 | 3440 | 0.4173 |
| 2.2638 | 3450 | 0.4402 |
| 2.2703 | 3460 | 0.4145 |
| 2.2769 | 3470 | 0.4161 |
| 2.2835 | 3480 | 0.4261 |
| 2.2900 | 3490 | 0.4079 |
| 2.2966 | 3500 | 0.3978 |
| 2.3031 | 3510 | 0.3916 |
| 2.3097 | 3520 | 0.4072 |
| 2.3163 | 3530 | 0.3966 |
| 2.3228 | 3540 | 0.3942 |
| 2.3294 | 3550 | 0.4018 |
| 2.3360 | 3560 | 0.3935 |
| 2.3425 | 3570 | 0.3886 |
| 2.3491 | 3580 | 0.4145 |
| 2.3556 | 3590 | 0.4053 |
| 2.3622 | 3600 | 0.3955 |
| 2.3688 | 3610 | 0.3994 |
| 2.3753 | 3620 | 0.4014 |
| 2.3819 | 3630 | 0.4036 |
| 2.3885 | 3640 | 0.407 |
| 2.3950 | 3650 | 0.3799 |
| 2.4016 | 3660 | 0.4 |
| 2.4081 | 3670 | 0.3964 |
| 2.4147 | 3680 | 0.4073 |
| 2.4213 | 3690 | 0.3909 |
| 2.4278 | 3700 | 0.4277 |
| 2.4344 | 3710 | 0.3893 |
| 2.4409 | 3720 | 0.3876 |
| 2.4475 | 3730 | 0.3905 |
| 2.4541 | 3740 | 0.4181 |
| 2.4606 | 3750 | 0.4039 |
| 2.4672 | 3760 | 0.4087 |
| 2.4738 | 3770 | 0.4034 |
| 2.4803 | 3780 | 0.4166 |
| 2.4869 | 3790 | 0.3807 |
| 2.4934 | 3800 | 0.4094 |
| 2.5 | 3810 | 0.4192 |
| 2.5066 | 3820 | 0.4167 |
| 2.5131 | 3830 | 0.3972 |
| 2.5197 | 3840 | 0.379 |
| 2.5262 | 3850 | 0.4003 |
| 2.5328 | 3860 | 0.3856 |
| 2.5394 | 3870 | 0.389 |
| 2.5459 | 3880 | 0.4066 |
| 2.5525 | 3890 | 0.3891 |
| 2.5591 | 3900 | 0.3839 |
| 2.5656 | 3910 | 0.4188 |
| 2.5722 | 3920 | 0.3821 |
| 2.5787 | 3930 | 0.4134 |
| 2.5853 | 3940 | 0.4149 |
| 2.5919 | 3950 | 0.4123 |
| 2.5984 | 3960 | 0.3925 |
| 2.6050 | 3970 | 0.4189 |
| 2.6115 | 3980 | 0.4144 |
| 2.6181 | 3990 | 0.4001 |
| 2.6247 | 4000 | 0.3972 |
| 2.6312 | 4010 | 0.3868 |
| 2.6378 | 4020 | 0.3963 |
| 2.6444 | 4030 | 0.4155 |
| 2.6509 | 4040 | 0.4055 |
| 2.6575 | 4050 | 0.3961 |
| 2.6640 | 4060 | 0.4101 |
| 2.6706 | 4070 | 0.396 |
| 2.6772 | 4080 | 0.3872 |
| 2.6837 | 4090 | 0.386 |
| 2.6903 | 4100 | 0.3717 |
| 2.6969 | 4110 | 0.397 |
| 2.7034 | 4120 | 0.4023 |
| 2.7100 | 4130 | 0.4019 |
| 2.7165 | 4140 | 0.4095 |
| 2.7231 | 4150 | 0.4092 |
| 2.7297 | 4160 | 0.4066 |
| 2.7362 | 4170 | 0.396 |
| 2.7428 | 4180 | 0.3928 |
| 2.7493 | 4190 | 0.393 |
| 2.7559 | 4200 | 0.3986 |
| 2.7625 | 4210 | 0.3779 |
| 2.7690 | 4220 | 0.3917 |
| 2.7756 | 4230 | 0.3849 |
| 2.7822 | 4240 | 0.3947 |
| 2.7887 | 4250 | 0.4006 |
| 2.7953 | 4260 | 0.4004 |
| 2.8018 | 4270 | 0.3827 |
| 2.8084 | 4280 | 0.3976 |
| 2.8150 | 4290 | 0.3877 |
| 2.8215 | 4300 | 0.3898 |
| 2.8281 | 4310 | 0.4024 |
| 2.8346 | 4320 | 0.3987 |
| 2.8412 | 4330 | 0.3911 |
| 2.8478 | 4340 | 0.3928 |
| 2.8543 | 4350 | 0.3822 |
| 2.8609 | 4360 | 0.3747 |
| 2.8675 | 4370 | 0.3974 |
| 2.8740 | 4380 | 0.3851 |
| 2.8806 | 4390 | 0.3983 |
| 2.8871 | 4400 | 0.417 |
| 2.8937 | 4410 | 0.4063 |
| 2.9003 | 4420 | 0.4019 |
| 2.9068 | 4430 | 0.4062 |
| 2.9134 | 4440 | 0.3901 |
| 2.9199 | 4450 | 0.3877 |
| 2.9265 | 4460 | 0.3725 |
| 2.9331 | 4470 | 0.3931 |
| 2.9396 | 4480 | 0.3822 |
| 2.9462 | 4490 | 0.3925 |
| 2.9528 | 4500 | 0.4192 |
| 2.9593 | 4510 | 0.3881 |
| 2.9659 | 4520 | 0.4004 |
| 2.9724 | 4530 | 0.4037 |
| 2.9790 | 4540 | 0.3993 |
| 2.9856 | 4550 | 0.4212 |
| 2.9921 | 4560 | 0.3766 |
| 2.9987 | 4570 | 0.3995 |
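The training log also lets you back out the size of an epoch. Step 1520 is logged at epoch 0.9974, which implies about 1520 / 0.9974 ≈ 1524 optimizer steps per epoch; at 500 examples per step, one epoch then covers between 761,501 and 762,000 training rows. That is in the same range as 800k rows minus the 40k test examples, although the card does not give the exact split sizes. The hypothetical helper below does this arithmetic, assuming a single device, gradient_accumulation_steps: 1, and a possibly partial final batch (dataloader_drop_last: False).

```python
def estimate_epoch_size(step: int, epoch: float, batch_size: int = 500):
    """From one (epoch, step) row of the training log, estimate the number of
    optimizer steps per epoch and the range of training rows per epoch.
    Assumes one device, no gradient accumulation, and that the last batch of
    each epoch may be partial (dataloader_drop_last: False)."""
    steps = round(step / epoch)
    min_rows = (steps - 1) * batch_size + 1  # final batch holds at least one row
    max_rows = steps * batch_size            # final batch is full
    return steps, min_rows, max_rows


print(estimate_epoch_size(1520, 0.9974))  # → (1524, 761501, 762000)
```

Later rows give steadier estimates than early ones because the logged epoch is rounded to four decimals: step 10 at epoch 0.0066 would suggest only about 1515 steps per epoch.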

Framework Versions

- Python: 3.13.9

- Sentence Transformers: 5.2.0

- Transformers: 4.57.3

- PyTorch: 2.9.1+cu128

- Accelerate: 1.12.0

- Datasets: 4.3.0

- Tokenizers: 0.22.1

Citation

BibTeX

Sentence Transformers

```bibtex
@inproceedings{reimers-2019-sentence-bert,
    title = "Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks",
    author = "Reimers, Nils and Gurevych, Iryna",
    booktitle = "Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing",
    month = "11",
    year = "2019",
    publisher = "Association for Computational Linguistics",
    url = "https://arxiv.org/abs/1908.10084",
}
```

MultipleNegativesRankingLoss

```bibtex
@misc{henderson2017efficient,
    title={Efficient Natural Language Response Suggestion for Smart Reply},
    author={Matthew Henderson and Rami Al-Rfou and Brian Strope and Yun-hsuan Sung and Laszlo Lukacs and Ruiqi Guo and Sanjiv Kumar and Balint Miklos and Ray Kurzweil},
    year={2017},
    eprint={1705.00652},
    archivePrefix={arXiv},
    primaryClass={cs.CL}
}
```


Model tree for electroglyph/FictionBert

Base model

answerdotai/ModernBERT-base