
---
title: AI Assistant For Visually Impaired
emoji: 💬
colorFrom: yellow
colorTo: purple
sdk: gradio
sdk_version: 5.42.0
app_file: app.py
pinned: false
hf_oauth: true
hf_oauth_scopes:
  - inference-api
license: mit
short_description: AI-Assistant-for-Visually-Impaired
tags:
  - mcp-in-action-track-consumer
  - building-mcp-track-consumer
---

Accessibility Voice Agent — MCP Tools

Track: mcp-in-action-track-consumer

Team: Team

Author: @subhash4face — Subhash Mankunnu

Author: Athira AR

Model Context Protocol (MCP) + Gradio 6 + HF Inference + ElevenLabs

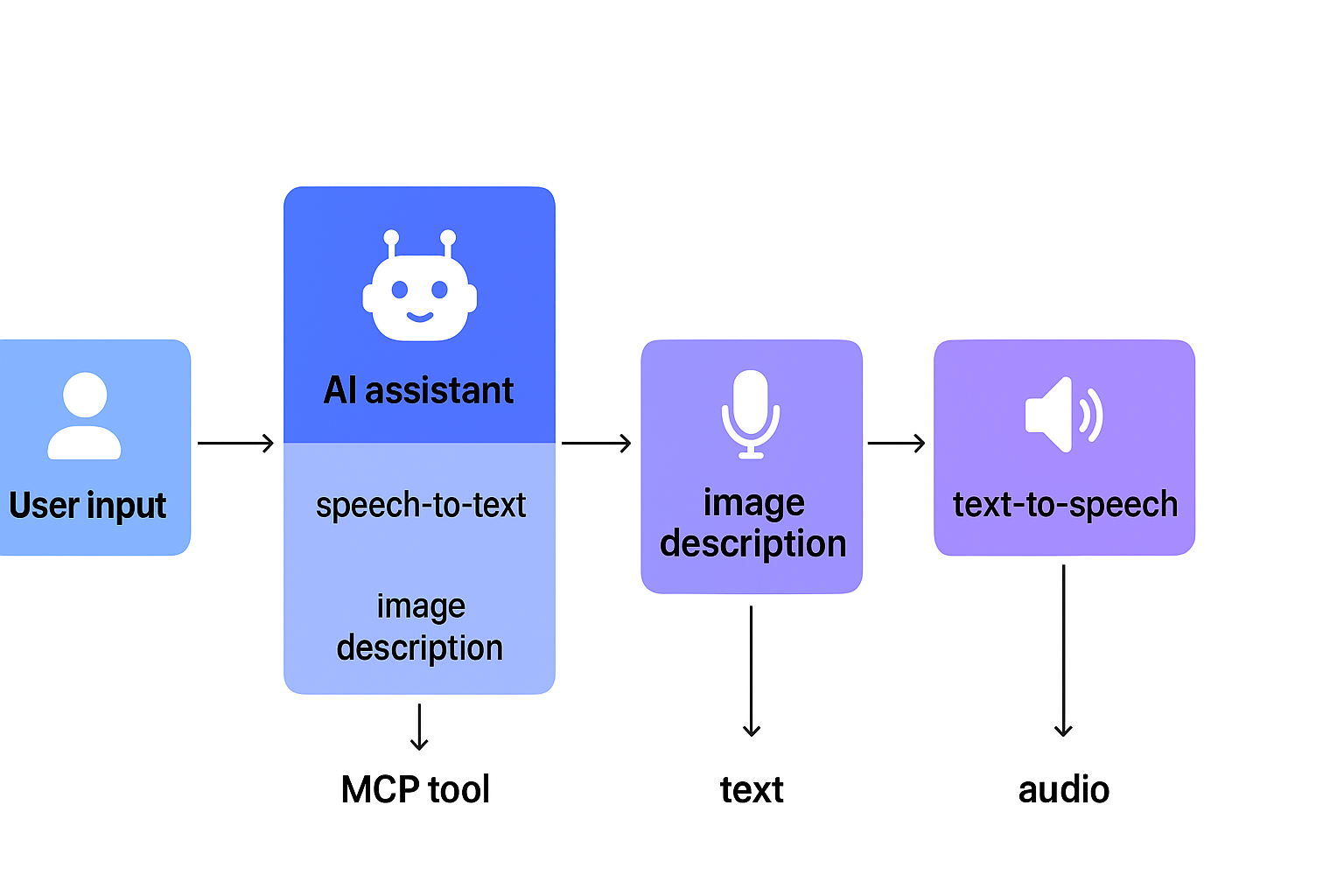

An accessible, voice-driven AI assistant that demonstrates how MCP tools can power speech-to-text, image-understanding, and text-to-speech workflows for low-vision and visually impaired users.

This project showcases a real-world use case of MCP tools working together inside an agent-style UI.

🔄 Workflow Diagram — MCP Tools
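The following stub-based sketch illustrates roughly how the three tools fit together in a single turn. The `Tools` container and `run_turn` function are hypothetical names, not the app's actual code. The no-image branch, which reads the transcript back, is a placeholder for whatever the assistant does with a plain spoken request.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Tools:
    """Hypothetical bundle of the three tool functions (stubs in this sketch)."""
    transcribe_audio: Callable[[bytes], str]
    describe_image: Callable[[bytes], str]
    speak_text: Callable[[str], bytes]


def run_turn(tools: Tools, audio: bytes, image: Optional[bytes] = None) -> dict:
    """One assistant turn: speech in, optional image description, speech out."""
    transcript = tools.transcribe_audio(audio)  # step 1: speech-to-text
    if image is not None:
        reply = tools.describe_image(image)  # step 2: describe the uploaded image
    else:
        reply = f"You said: {transcript}"  # placeholder reply when no image is given
    audio_out = tools.speak_text(reply)  # step 3: text-to-speech
    return {"transcript": transcript, "reply": reply, "audio": audio_out}
```

Because each step is a plain callable, any backend (Whisper or local STT, OpenAI/Gemini/HF vision, ElevenLabs TTS) can be swapped in without changing the orchestration.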

🚀 Demo Video

👉 https://youtu.be/af4Y89g2HPE

🚀 Social Media Post - LinkedIn

🌟 Key Features

🔊 Text-to-Speech (TTS) via ElevenLabs

MCP Tool: speak_text

- Converts any assistant message to natural speech

- Returns base64 audio + WAV playback

- Helps low-vision users receive spoken responses
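The sketch below shows one way a `speak_text` tool could return base64 audio that plays back as WAV. It assumes the ElevenLabs REST text-to-speech endpoint with `output_format=pcm_16000` (raw 16-bit mono PCM), a placeholder voice ID, and an `ELEVENLABS_API_KEY` environment variable. These names are illustrative and are not taken from this project's `app.py`. The synthesizer is passed in as a parameter, so the WAV and base64 step can be exercised without network access.

```python
import base64
import io
import json
import os
import urllib.request
import wave
from typing import Callable

SAMPLE_RATE = 16_000  # must match the assumed "pcm_16000" output format


def elevenlabs_pcm(text: str, voice_id: str = "YOUR_VOICE_ID") -> bytes:
    """Request raw 16-bit mono PCM from the ElevenLabs TTS REST endpoint (assumed usage)."""
    url = (f"https://api.elevenlabs.io/v1/text-to-speech/{voice_id}"
           "?output_format=pcm_16000")
    req = urllib.request.Request(
        url,
        data=json.dumps({"text": text}).encode("utf-8"),
        headers={"xi-api-key": os.environ["ELEVENLABS_API_KEY"],  # hypothetical env var
                 "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return resp.read()


def pcm_to_wav(pcm: bytes, sample_rate: int = SAMPLE_RATE) -> bytes:
    """Wrap raw 16-bit little-endian mono PCM in a WAV container."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes = 16-bit samples
        wav.setframerate(sample_rate)
        wav.writeframes(pcm)
    return buf.getvalue()


def speak_text(text: str, synthesize: Callable[[str], bytes] = elevenlabs_pcm) -> dict:
    """Hypothetical speak_text tool body: text in, base64-encoded WAV out."""
    if not text.strip():
        raise ValueError("speak_text needs non-empty text")
    wav_bytes = pcm_to_wav(synthesize(text))
    return {"audio_base64": base64.b64encode(wav_bytes).decode("ascii"),
            "mime_type": "audio/wav"}
```

Returning base64 keeps the tool result JSON-friendly for MCP clients, and a UI can decode the same payload for playback.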

🎤 Speech-to-Text (STT) via Whisper / Local fallback

MCP Tool: transcribe_audio

- Uses OpenAI Whisper for STT, with a local fallback

- Supports hands-free use

- The tool-call log shows which backend handled each request and how long it took
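The fallback and the backend/duration log can be sketched as an ordered list of backends that are tried in turn. `ToolCallLog`, the backend labels, and the log fields are assumptions made for illustration. A real backend would wrap the OpenAI transcription API or a local model.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# (label, function) pairs; labels are hypothetical
Backend = Tuple[str, Callable[[bytes], str]]


@dataclass
class ToolCallLog:
    entries: List[dict] = field(default_factory=list)

    def record(self, tool: str, backend: str, seconds: float, ok: bool) -> None:
        self.entries.append({"tool": tool, "backend": backend,
                             "duration_s": round(seconds, 3), "ok": ok})


def transcribe_audio(audio: bytes, backends: List[Backend], log: ToolCallLog) -> str:
    """Try each STT backend in order; on any error, log it and fall back to the next."""
    errors = []
    for name, fn in backends:
        start = time.perf_counter()
        try:
            text = fn(audio)
        except Exception as exc:
            log.record("transcribe_audio", name, time.perf_counter() - start, ok=False)
            errors.append(f"{name}: {exc}")
            continue
        log.record("transcribe_audio", name, time.perf_counter() - start, ok=True)
        return text
    raise RuntimeError("all STT backends failed: " + "; ".join(errors))
```

Logging failed attempts as well as the successful one makes it visible when the fallback path is being used.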

🖼 Image Description via OpenAI / Gemini / HF Inference

MCP Tool: describe_image

- Multimodal accessibility

- Describes any uploaded image in plain language

- Uses the Hugging Face Inference API instead of a locally run BLIP model
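Here is a minimal, standard-library-only sketch of the Hugging Face path. Several details are assumptions: the router URL, the example BLIP model ID, the `HF_TOKEN` environment variable, and the JPEG content type. The Space requests the `inference-api` OAuth scope, so it may obtain its token differently. Check the current Inference Providers documentation before relying on any of these. Response parsing is split out so it can be checked offline.

```python
import json
import os
import urllib.request

# Assumed endpoint and example model ID; verify both against current HF docs.
HF_URL = "https://router.huggingface.co/hf-inference/models/{model}"
DEFAULT_MODEL = "Salesforce/blip-image-captioning-base"


def parse_caption(payload) -> str:
    """Extract the caption from an image-to-text response and tidy it into a sentence."""
    if isinstance(payload, dict) and "error" in payload:
        raise RuntimeError(payload["error"])
    item = payload[0] if isinstance(payload, list) else payload
    caption = item["generated_text"].strip()
    if not caption:
        raise RuntimeError("empty caption")
    # Capitalize the first letter and end with a period for plain-language output
    return caption[:1].upper() + caption[1:] + ("" if caption.endswith(".") else ".")


def describe_image(image_bytes: bytes, model: str = DEFAULT_MODEL) -> str:
    """Hypothetical describe_image tool body: raw image bytes in, caption out."""
    req = urllib.request.Request(
        HF_URL.format(model=model),
        data=image_bytes,
        headers={"Authorization": f"Bearer {os.environ['HF_TOKEN']}",  # hypothetical env var
                 "Content-Type": "image/jpeg"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=60) as resp:
        return parse_caption(json.loads(resp.read()))
```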

🧩 Fully MCP-powered

Every capability is wrapped as an MCP tool, making this app a template for:

- Agents

- Assistive technologies

- Multimodal accessibility apps

- Voice-driven workflows

- Cross-backend tool orchestration
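The sketch below makes "wrapped as an MCP tool" concrete. It handles the two MCP JSON-RPC methods that a client uses to discover and invoke tools, `tools/list` and `tools/call`. It is a toy dispatcher built on the standard library, with a hypothetical `echo_upper` tool. It omits the initialization handshake, the transports, and most of the error handling that an MCP SDK or Gradio's MCP server support would provide.

```python
import inspect
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn):
    """Register a function as a tool; its docstring becomes the tool description."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def echo_upper(text: str) -> str:
    """Toy stand-in for a real tool such as speak_text."""
    return text.upper()


def _schema(fn) -> dict:
    # Every parameter is typed as a string to keep the sketch short.
    params = inspect.signature(fn).parameters
    return {"type": "object",
            "properties": {p: {"type": "string"} for p in params},
            "required": [p for p, v in params.items() if v.default is inspect.Parameter.empty]}


def handle(message: str) -> str:
    """Answer one JSON-RPC 2.0 request for the MCP tools/list or tools/call methods."""
    req = json.loads(message)
    if req["method"] == "tools/list":
        result = {"tools": [{"name": n, "description": inspect.getdoc(f) or "",
                             "inputSchema": _schema(f)} for n, f in TOOLS.items()]}
    elif req["method"] == "tools/call":
        fn = TOOLS[req["params"]["name"]]
        output = fn(**req["params"].get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(output)}], "isError": False}
    else:
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": -32601, "message": "Method not found"}})
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"), "result": result})
```

Because discovery is driven by the registry, exposing another capability only means decorating another function with `@tool`.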

💡 Real Use Case: Accessibility

Designed for:

- Low-vision users

- Voice-interface users

- Anyone needing automated image descriptions

- Hands-free workflows

- Assistive technology research

🛠 Tech Stack

- MCP Server (Python)

- Gradio 6

- OpenAI Whisper (STT)

- ElevenLabs (TTS)

- Gemini Vision (optional)

- Hugging Face Inference API (image captioning)

- Python

🏁 How to Run Locally

pip install -r requirements.txt

python app.py